How ChatGPT fails at data analysis

It works in theory, but not in (today's) practice

Dear finance executive,

There’s so much talk and promise about LLMs like ChatGPT helping with data analysis that I thought I’d try it myself on real data.

The result: Off-the-shelf ChatGPT works in theory, but not in practice.

Here is my experimental setup:

ChatGPT 4o (which uses Python programming for analysis)

Real-world data: 200 rows of financial and non-financial survey data across 225 dimensions, semi-cleaned, with gaps and even some HTML code artifacts

What works well

Let’s start with the positives. ChatGPT’s technical ability is amazing:

ChatGPT is able to perform advanced statistical analysis on large data sets

You can perform analysis using natural language: Multivariate regression, ANOVA, PCA, IQR… and if you don’t know what these acronyms mean, the explanation is just a prompt away

It works well for data cleaning: I could easily do advanced “search and replace/clean” or outlier detection and removal (“remove everything that looks like survey coding or html from dimension x” “remove everything that starts with x and ends with y”)

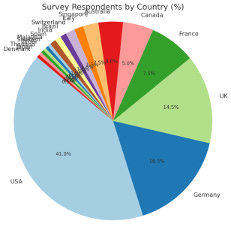

It already works well for simple analysis (“show me the distribution of the data by country”)

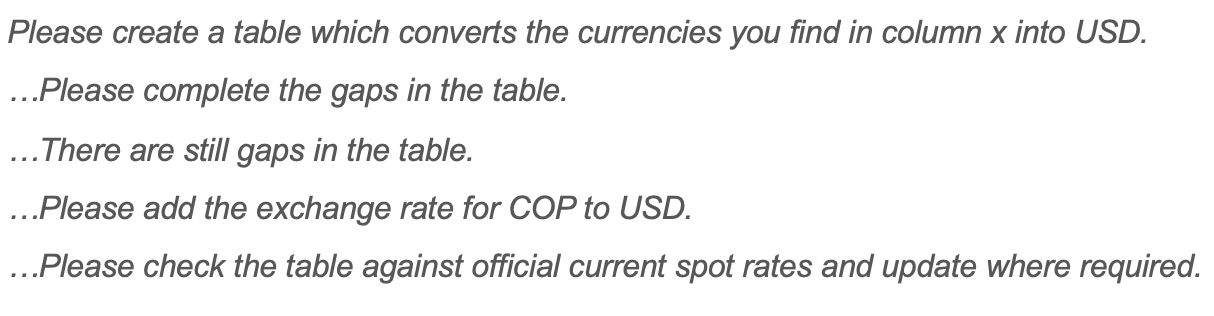

ChatGPT has public data research built-in (of course), so I was able to research current fx rates and convert the numbers I had into one currency

It can actually generate graphs from the data - I was not aware of this before I tried it
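To make the data cleaning point more concrete, here is a minimal sketch of the kind of Python that a prompt like “remove everything that looks like survey coding or html from dimension x” might translate into. This is my own illustration, not the code ChatGPT actually ran. The function name `clean_cell` and the survey-code pattern (bracketed tags such as `[Q12_a]`) are assumptions; real survey exports will need their own patterns.

```python
import html
import re

# Anything that looks like an HTML tag, e.g. "<p>" or "<br/>"
TAG_RE = re.compile(r"<[^>]+>")
# Hypothetical survey-coding pattern, e.g. "[Q12_a]" (an assumption, adapt to your data)
CODE_RE = re.compile(r"\[Q\d+[^\]]*\]")


def clean_cell(value):
    """Strip HTML tags and survey codes, decode entities, normalise whitespace."""
    if value is None:
        return None
    text = TAG_RE.sub(" ", value)      # drop tags first, so a decoded "<" is not mistaken for one
    text = CODE_RE.sub(" ", text)      # drop survey codes
    text = html.unescape(text)         # "&amp;" becomes "&"
    text = " ".join(text.split())      # collapse runs of whitespace
    return text or None                # treat empty cells as gaps


print(clean_cell("<p>Banking &amp; Insurance</p> [Q12_a]"))
```

Having the rules written down like this is also what makes the cleaning step checkable: you can read the patterns and see exactly what gets removed.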

What does not work well

In practice, I encountered several issues:

It makes obvious and non-obvious mistakes

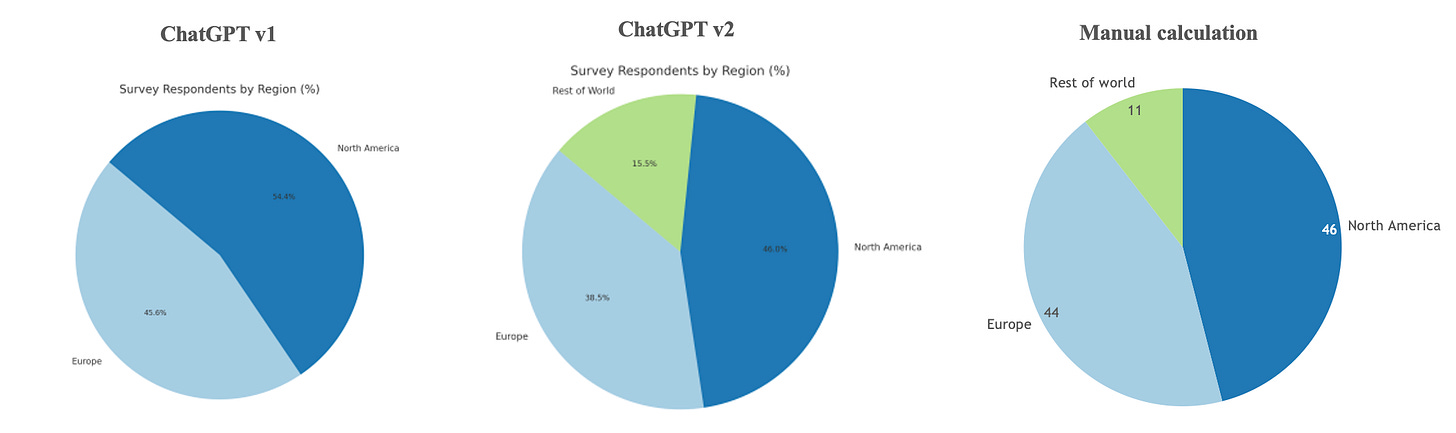

I asked ChatGPT to create a breakdown of the data in a more aggregated form (“Give me a breakdown by region”). The first output was obviously wrong, which I then corrected (“You forgot rest of world”). Unfortunately, even the corrected output was still incorrect - as you can see by comparing it with my manual analysis.

It performs badly on data classification

The result above is just one example. I tried to generate metadata by adding categories to the data dimensions. The categories that ChatGPT suggested were useless. To fix this, I gave it categories and asked it to assign dimensions to those categories for me. It returned complete nonsense - a high school graduate could have done better.

It is impossible to verify correctness

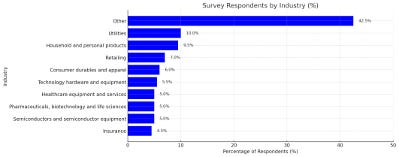

ChatGPT uses Python to do the analysis. Unfortunately, this is a black box, making it difficult to detect errors. One example: “Give me a breakdown of the data by industry. Summarize all smaller industries into "Other", show max of 6-10 industries.”

The problem is unfortunately very subtle. In this particular data set there are 4 industries that each have a 4.5% share of the data. ChatGPT arbitrarily shows one of these four (insurance) and folds the other 3 into “Other”, because the prompt “forced it” to show a maximum of 10 lines. I would not have spotted this issue if I had not redone the analysis manually.

It takes significant effort

It frequently requires multiple prompts to make ChatGPT do what you want. Here’s an example, where it took me 5 prompts to get to the result.

Using outputs is cumbersome

Let’s face it: The graphs that ChatGPT produces don’t look professional and ignore common-sense design practices. Just take a second look at the screenshot of the country breakdown above, which has overlapping labels. And improving graphs via prompts is a pain, too.

Furthermore, using ChatGPT results in xls is not easy either. You have to import a CSV file with formatting issues that I had to fix afterwards.

Working with an LLM is often described as having a university-graduate assistant who lacks industry understanding. In this experiment, it frequently felt like working with a 10-year-old assistant who knows advanced statistics and Python programming, but who needs every single step explained and lacks a common-sense understanding of the data.

What does this mean…

… for doing financial analysis with LLMs like ChatGPT?

It is not universally useful

ChatGPT works well on simple analysis, but that analysis is done much faster and better in xls or Tableau or Alteryx. Even if ChatGPT fixes some of the above issues, it will not be the right tool for every job.

In my view, the most promising use cases for ChatGPT are “data cleaning” and “Python coding for advanced statistical analysis”. I have tested the first use case myself. We know that ChatGPT is great at coding, so it should also work well for data scientists in finance.

It does not work well off-the-shelf

ChatGPT is a technology that needs a wrapper, an application to make it work well for data analysis for the everyday controller. The application will ensure that the data is properly understood, that the LLM asks clarifying questions if the natural language input is vague, and that the (graph) outputs adhere to common best practices.

Maybe OpenAI will integrate the wrapper into ChatGPT itself, but I think a separate FinancialAnalysis.ai application is more likely.

It needs a human pro

It becomes clear that the expert in the loop will remain essential… to understand the data, to ask the right questions, to check the validity of the statistical methods, and to check the plausibility of the results.

Practical takeaways for finance executives

Use ChatGPT for tasks that you cannot do in xls/Tableau/Alteryx

(e.g., data cleaning and Python coding for advanced data analysis)

Ensure there is an expert in the loop - at this point, it’s not an everyday controller tool

Verify ChatGPT results. Always.
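One way to verify a breakdown like the industry example above is to redo it in a few lines of Python that you can actually read. The sketch below is my own illustration, not what ChatGPT ran: the function name `breakdown_with_other` and the sample data are made up. It folds small categories into “Other” but never splits a tie at the cutoff, and it reports which categories were folded so that nothing disappears silently.

```python
from collections import Counter


def breakdown_with_other(values, max_rows=10):
    """Return (rows, folded): share per category, with the tail folded into 'Other'.

    Categories tied with the first one that cannot get its own row are all
    folded together, so no tied category is shown arbitrarily.
    """
    counts = Counter(values)
    total = sum(counts.values())
    ranked = counts.most_common()              # sorted by count, largest first
    if len(ranked) <= max_rows:
        kept, folded = ranked, []              # everything fits, no "Other" needed
    else:
        # One row is reserved for "Other", so the category at index
        # max_rows - 1 is the first that cannot get its own row.
        cutoff = ranked[max_rows - 1][1]
        kept = [(name, n) for name, n in ranked if n > cutoff]
        folded = [(name, n) for name, n in ranked if n <= cutoff]
    rows = [(name, n / total) for name, n in kept]
    if folded:
        rows.append(("Other", sum(n for _, n in folded) / total))
    return rows, [name for name, _ in folded]


# Made-up sample data: C, D and E are tied at the cutoff
sample = ["A"] * 5 + ["B"] * 3 + ["C"] * 2 + ["D"] * 2 + ["E"] * 2 + ["F"]
rows, folded = breakdown_with_other(sample, max_rows=4)
for name, share in rows:
    print(f"{name}: {share:.1%}")
print("Folded into Other:", ", ".join(folded))
```

The design choice here is to show fewer rows rather than pick one of several equally sized categories. Whether that is the right rule depends on your reporting needs, but at least the rule is explicit.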

What is your experience when using ChatGPT for data analysis?

Sebastian